Note: those marked with an asterisk I am not 100% confident in my answer/reasoning.

Please feel free to discuss the problems and my proposed solutions below!

Quiz

P1

Let S’ be the optimal min-size subset of vertices and S be the subset returned by the greedy algorithm. Since edges were selected with no common vertices prior to addition to S, none of the edges in S contain common endpoints. As such, in order for S to be a vertex cover of G one only need one endpoint of each edge that was added to S and this ends up halving the total number of endpoints in S. Therefore, |S| ≤ 2 * |S’|.∎

P2

This problem is taken directly from Cormen et al’s Introduction to Algorithms 3rd edition, Theorem 35.4 and Corollary 35.5. The proof for Theorem 35.4 is rather lengthy and involved so I direct anyone reading this to refer to that text for a full explanation to this problem. I understand and believe the proofs presented in the text, but I am unable to explain in layman’s what it means nor do I want to make the incredible effort of re-proving it.

P3

Let C* be an optimal solution pair of sets from the collection of S1, S2,…, Sm. Denote the solution pair as Si and Sj, chosen in that order. Note then that |Si| ≥ |C*|/2 because otherwise Sj would have been picked first instead. Denote the elements in C* that are not already in Si as x. The second subset Sj must then contain half of the elements in x, otherwise either some other subset in the collection, say Sk, would have been the second selection or x is not actually as large as originally assumed. If we assume |Si| equals its lower boundary limit of |C*|/2, then x is also |C*|/2. And since Sj must contain half of x’s elements, |Sj|’s lower boundary limit will have to be half of |C*|/2, or |C*|/4. This means the overall lower limit of any sub-optimal solution from the algorithm will have to be |C*|/2 + |C*|/4 = 3|C*|/4.∎

P4

This problem is taken directly from Kleinberg/Tardos’ Algorithm Design, Theorem 11.3. Please feel free to refer to that text for more in depth analysis.

Let A = average load across the m machines, (Sum job times)/m, and B = maximum job time. Denote T’ as the optimal makespan, and note that T’ ≥ A and T’ ≥ B. Since the jobs are added as they are read, the last job determines the makespan so we will analyze the last job to be added with tj process time. Denote the last machine to receive the last job as Mi and its total load after receiving the last job as Ti. This means its load prior to receiving job tj was Ti - tj. Additionally, since it was the last machine to receive a job, this means its load was the smallest of all the other machines. As such, each machine had a load at least Ti - tj. Summing these all up, we get Sum(T’s) ≥ m(Ti - tj), or equivalently: Ti - tj ≤ A. Recall that T’ ≥ A, so this means that Ti - tj ≤ T’. And since T’ ≥ B, we know that T’ ≥ tj which then implies: Ti = (Ti - tj) + tj ≤ 2T’. Or just T ≤ 2T’. ∎

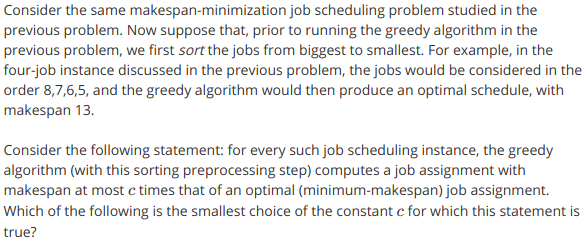

P5

Once again, this problem is taken directly from Kleinberg/Tardos’ Algorithm Design, Theorem 11.5. Please feel free to refer to that text for more in depth analysis.

Firstly, if there are more than m jobs, then T’ ≥ 2tm+1. Note that the jobs are in decreasing sorted order. So every job up to the m+1 job will be at least tm+1. Since there’s m machines and m+1 jobs, one machine will have two jobs (pigeon hole). The machine with two jobs will have a total processing time of at least 2tm+1. Since jobs were added in decreasing order, we can then presume T’ ≥ 2tm+1, or 0.5 T’ ≥ tm+1

Now we follow a proof similar to the one used in problem 4; let’s assume machine Mi is the next machine to receive a job and it has at least two jobs assigned to it already. An incoming job, j, will have tj ≤ tm+1 ≤ 0.5 T’. Now in following the proof from problem 4, we get Ti - tj ≤ T’. Since tj ≤ 0.5 T’, we can then conclude Ti ≤ 1.5T’.∎

Programming Assignment

For this programming assignment we look at the TSP again, but this time with a much larger data set (37000+). This time around the exponential deterministic algorithm from last week will not cut it, so we must make use of an approximate polynomial-time algorithm, which is the following:

- Start the tour at the first city.

- Repeatedly visit the closest city that the tour hasn’t visited yet. In case of a tie, go to the closest city with the lowest index. For example, if both the third and fifth cities have the same distance from the first city (and are closer than any other city), then the tour should begin by going from the first city to the third city.

- Once every city has been visited exactly once, return to the first city to complete the tour.

The data points are already in order by ID number (1, 2, 3,…), but are also, interestingly, in order of increasing x coordinate. Using the triangle inequality theorem, we can gain a computational advantage. Instead of having to compare a point to all potential points, we only need to compare points until the minimum recorded distance so far is less than the difference in x coordinates. With this optimization, the following code can run in about 6 seconds:

C++

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

void solvePart4Week3Q1(){

//Open up file of data point data

string filename = "/home/nathan/Programming/OSSU/Core_Theory/"

"Algorithms-Roughgarden/Part4/Week3/nn.txt";

ifstream infile(filename);

string line;

//read in number of nodes

getline(infile, line);

int numberOfNodes = stoi(line);

//create and populate array of data points

vector<dataPoint*> V;

double x,y;

int id = 1;

getline(infile, line);

vector<string> coors = split(line, ' ');

x = std::stod(coors[1]);

y = std::stod(coors[2]);

dataPoint start(id, x, y);

id++;

start.setPrevious(&start);

//Read in data point values and store in V.

while (getline(infile, line) ){

vector<string> coors = split(line, ' ');

x = std::stod(coors[1]);

y = std::stod(coors[2]);

V.push_back(new dataPoint(id, x, y));

id++;

}

cout << setprecision(4) << fixed;

cout << "Size of V: " << V.size()

<< " Expected: " << numberOfNodes-1 << endl;

cout << start.getPrevious()->getID() << endl;

//initialize distance, d

double totalPathDistance = 0;

double dx;

double minSD = 1000000000; //min square distance for current iteration

double tempMinSD;

int currNodeComp = 0; //number of comparisons made with current node

int totalComparisons = 0; //counts total comparisons made.

int nodesProcessed = 0;

int nextIndex;

//while V is not empty

while(! V.empty()){

currNodeComp=0;

for (int i =0; i < V.size(); i++){

currNodeComp++;

tempMinSD = V[i]->squareDistance(*(start.getPrevious()));

if ( tempMinSD < minSD){

minSD = tempMinSD;

nextIndex = i;

}

//datapoints happen to be sorted by x coordinate...

dx = (V[i]->getX() - start.getPrevious()->getX());

if (minSD < dx*dx){

break;

}

}

totalComparisons += currNodeComp;

start.setPrevious(V[nextIndex]);

V.erase(V.begin()+nextIndex);

totalPathDistance+=pow(minSD,0.5);

nodesProcessed++;

if (nodesProcessed % 1000 == 0)

cout << nodesProcessed << ": " << V.size()

<< " remaining, with distance of " << totalPathDistance

<< ", broken at " << currNodeComp << " when the minDN was at: "

<< nextIndex << endl;

minSD = 1000000000;

}

totalPathDistance += start.distance(*start.getPrevious());

//print d

cout << (int)totalPathDistance << endl;

cout << "total comparisons: " << totalComparisons << endl;

}

Strangely, a vector data structure performs better than a list data structure. I would think everything would be comparable aside from the deletion of elements, which list should perform better with as there’s no need to shift contiguous data as with the vector (I think?). But, vector outperforms the list in this algorithm somehow…